AI Assistant

| Component | Type | Description |
|---|---|---|
| AI Assistant | 🔀 action | Perform an action using LLMs |

The AI Assistant sends a request to a selected LLM to perform an action and return an output. Example use cases include:

- asking the AI to output a customized email body using placeholder values from a form

- asking the AI to extract text from uploaded images

- asking the AI to write custom code that is then passed to the Run Code component

- asking the AI to output a value that the branch paths and conditional paths components then use to route the workflow

Models

The following models are currently available in the assistant:

- gpt-4o-mini (default)

- gpt-4o

- gpt-4-vision (legacy)

- gpt-4-turbo (legacy)

- gpt-3.5-turbo (legacy)

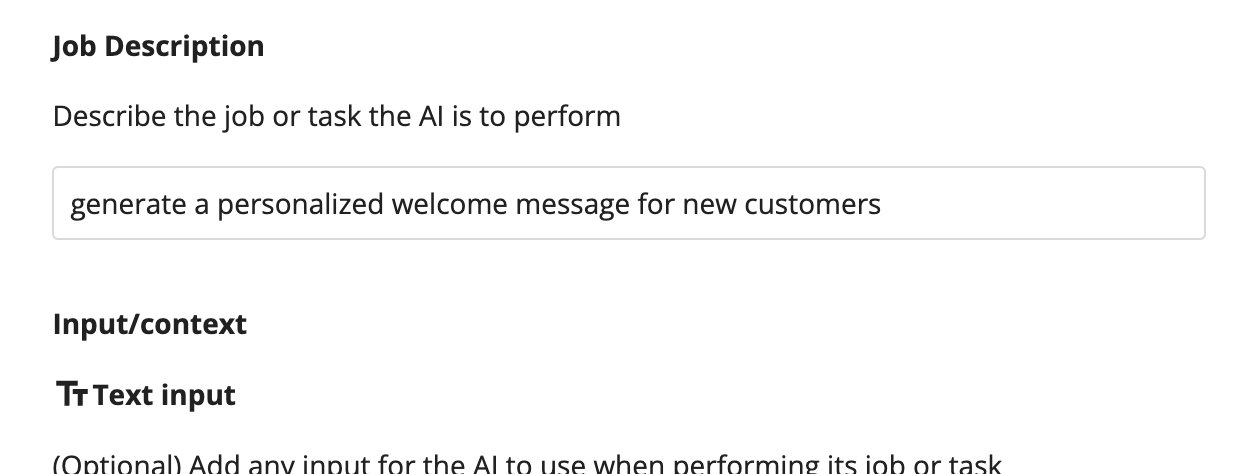

Job description

Provide a job description for the AI Assistant. This helps it understand its purpose and function, how to handle the inputs, and how to generate the specified outputs.

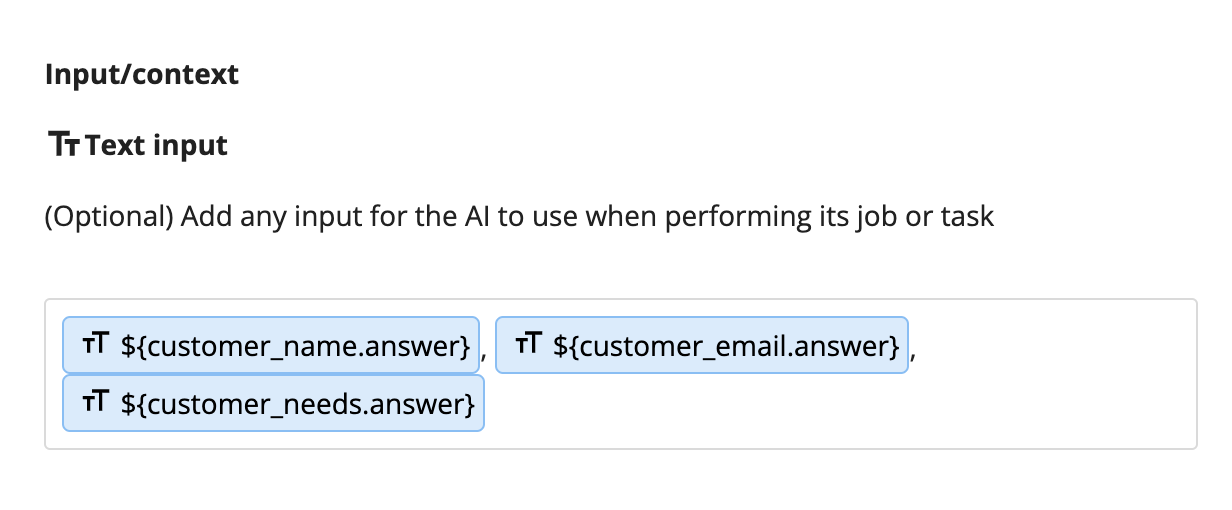

Context

Insert any text content to be used by the AI when performing its job. This can be text, placeholders or a combination of both. The AI will use this as context when performing its job and generating the outputs.
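To illustrate how text and placeholders combine into a single context string, here is a minimal sketch. The `{{name}}` placeholder syntax, the `fill_placeholders` helper, and the sample field names are all assumptions made for illustration; the product's actual placeholder syntax and substitution logic may differ.

```python
import re


def fill_placeholders(template: str, values: dict) -> str:
    """Replace each {{name}} token with its value; leave unknown tokens untouched."""

    def substitute(match):
        key = match.group(1).strip()
        # Fall back to the original token when no value is available.
        return str(values.get(key, match.group(0)))

    return re.sub(r"\{\{(.*?)\}\}", substitute, template)


# Hypothetical form answers mixed with static text.
context = fill_placeholders(
    "Customer name: {{name}}\nOrder number: {{order_id}}",
    {"name": "Ada", "order_id": "A-1042"},
)
print(context)
```

Leaving unknown tokens in place, rather than replacing them with empty text, makes a missing form answer easy to spot in the resulting context.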

Image inputs

For models with vision, you can use image URLs as inputs. For example, if the user uploaded an image through a form, you can use the placeholder for that question as an input.

Each image input must be an image URL. You can pass in multiple image URLs, including a list of image URLs. If you pass in multiple image URLs, all of the images are sent to the AI in the same request.
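As a rough sketch of what "each image in the same request" can look like, the snippet below flattens a mix of single URLs and lists of URLs into one user message. The message shape follows the image input format of the OpenAI Chat Completions API; whether the AI Assistant builds its request exactly this way is an assumption, and `build_vision_message` and the example URLs are hypothetical.

```python
def build_vision_message(prompt: str, image_inputs: list) -> dict:
    """Combine a text prompt and all image URLs into a single user message."""
    urls = []
    for item in image_inputs:
        if isinstance(item, str):
            urls.append(item)   # a single image URL
        else:
            urls.extend(item)   # a list of image URLs

    # One text part followed by one image part per URL, all in the same message.
    content = [{"type": "text", "text": prompt}]
    content += [{"type": "image_url", "image_url": {"url": url}} for url in urls]
    return {"role": "user", "content": content}


message = build_vision_message(
    "Extract the text from these images.",
    [
        "https://example.com/receipt.png",
        ["https://example.com/page-1.png", "https://example.com/page-2.png"],
    ],
)
print(len(message["content"]))
```

Because all image parts live in one message, the model sees every image together and can compare or combine their contents in a single answer.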

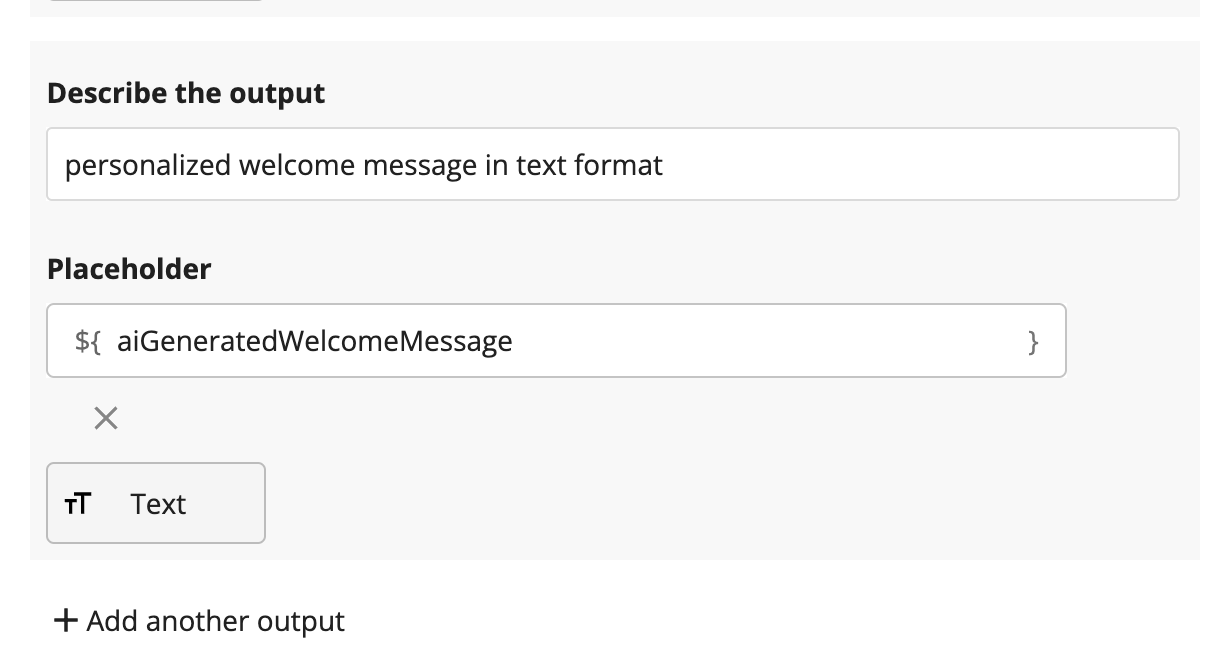

Setting the output

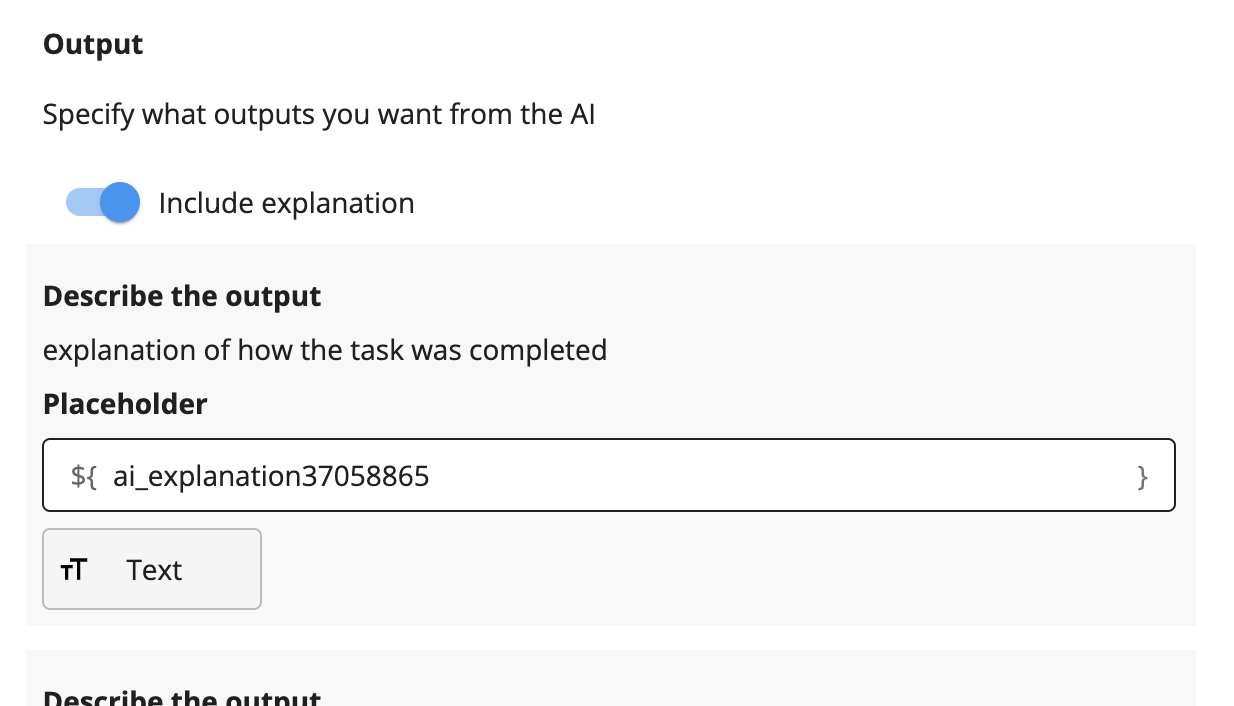

Explanation

By default, the AI can return an explanation of how it performed the requested action. Turn this switch on to include this explanation in the output.

Setting outputs

Describe each output that the AI should return. For each output, provide a description and select its data type.
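One way to think about an output definition is as a name, a description, and a data type that the AI's response is checked against. The sketch below models that idea; the output names, the type names (`string`, `number`, `boolean`), and the `validate_outputs` helper are assumptions for illustration, not the component's actual configuration format.

```python
import json

# Hypothetical output definitions: each has a name, a description, and a data type.
OUTPUTS = [
    {"name": "email_body", "description": "The customized email text", "type": "string"},
    {"name": "is_urgent", "description": "Whether the request needs a same-day reply", "type": "boolean"},
]

# Assumed mapping from data type names to Python types.
PYTHON_TYPES = {"string": str, "number": (int, float), "boolean": bool}


def validate_outputs(raw_json: str, outputs: list = OUTPUTS) -> dict:
    """Parse a JSON response and check each defined output against its data type."""
    data = json.loads(raw_json)
    for spec in outputs:
        value = data.get(spec["name"])
        expected = PYTHON_TYPES[spec["type"]]
        # bool is a subclass of int in Python, so reject it explicitly for numbers.
        bool_as_number = isinstance(value, bool) and spec["type"] == "number"
        if bool_as_number or not isinstance(value, expected):
            raise ValueError(f"Output '{spec['name']}' is not a valid {spec['type']}")
    return data


result = validate_outputs('{"email_body": "Hi Ada, your order has shipped.", "is_urgent": false}')
print(result["email_body"])
```

Specific descriptions matter here: the description is the only guidance the AI has about what belongs in each output, while the data type constrains the shape of the value that later components receive.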